Master of Arts Final Project. Real-time face tracking and projection mapping for an immersive narrative experience.

Background

The objective of this project is to use technology to create a live performance that tells the story of schizophrenia in an immersive way. Schizophrenia is a misunderstood and frequently stigmatised mental disorder that affects both those who live with it and their loved ones. The project uses projections to highlight the dancers' movements and draw the audience fully into the story. By presenting the condition to viewers from a different perspective, OP ("PANTOMIMUS") aims to increase public awareness of schizophrenia.

Challenge

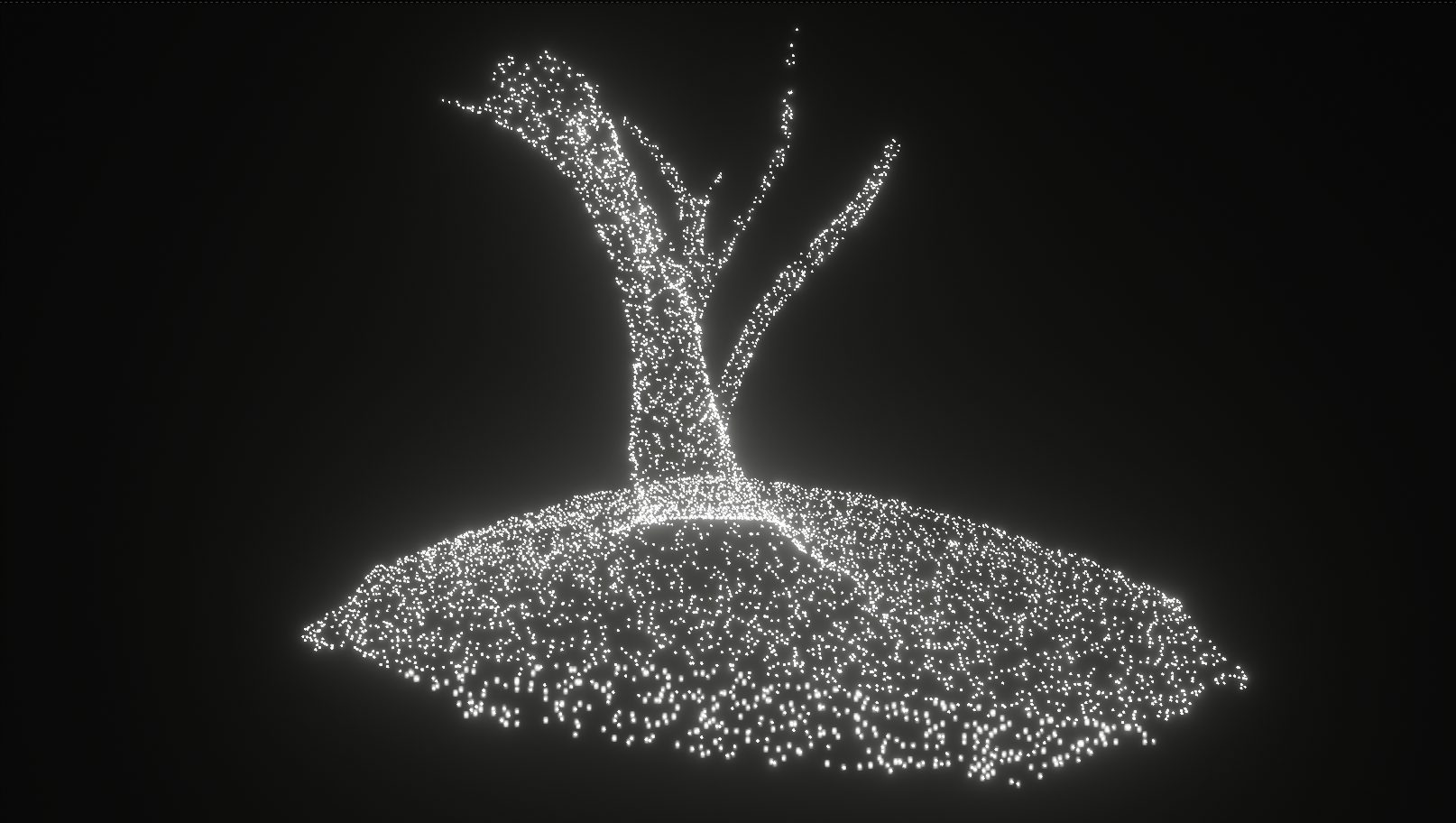

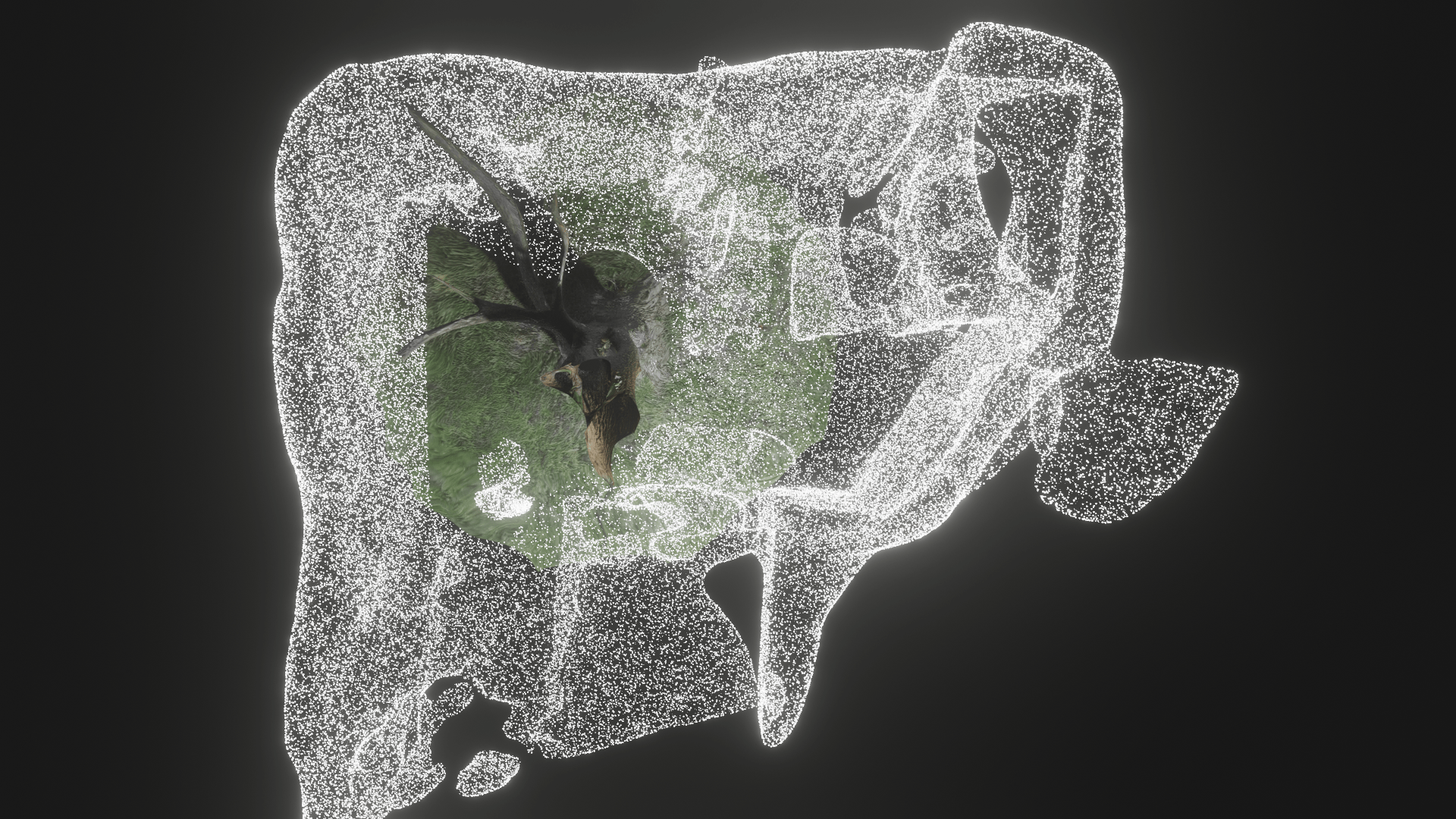

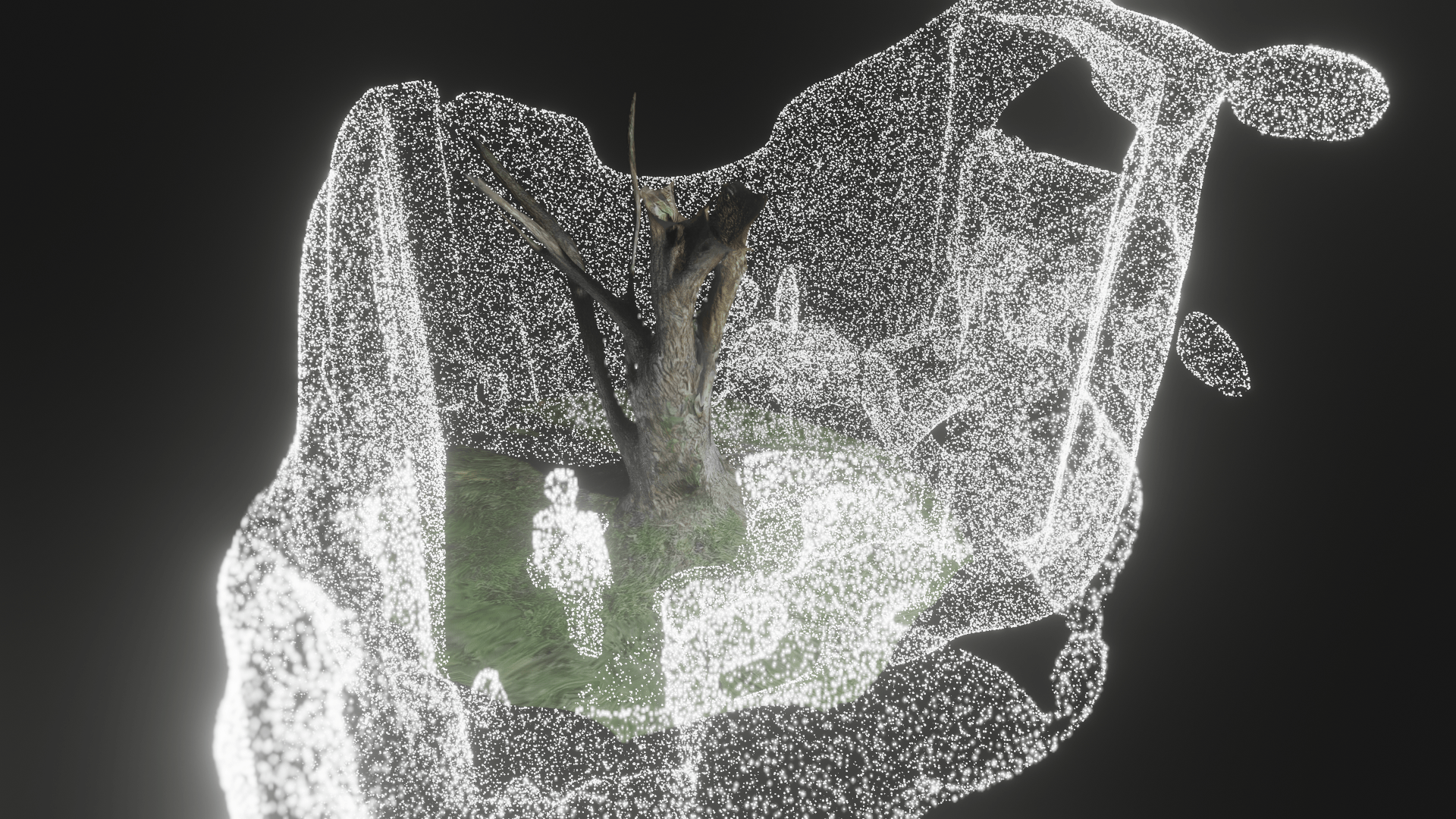

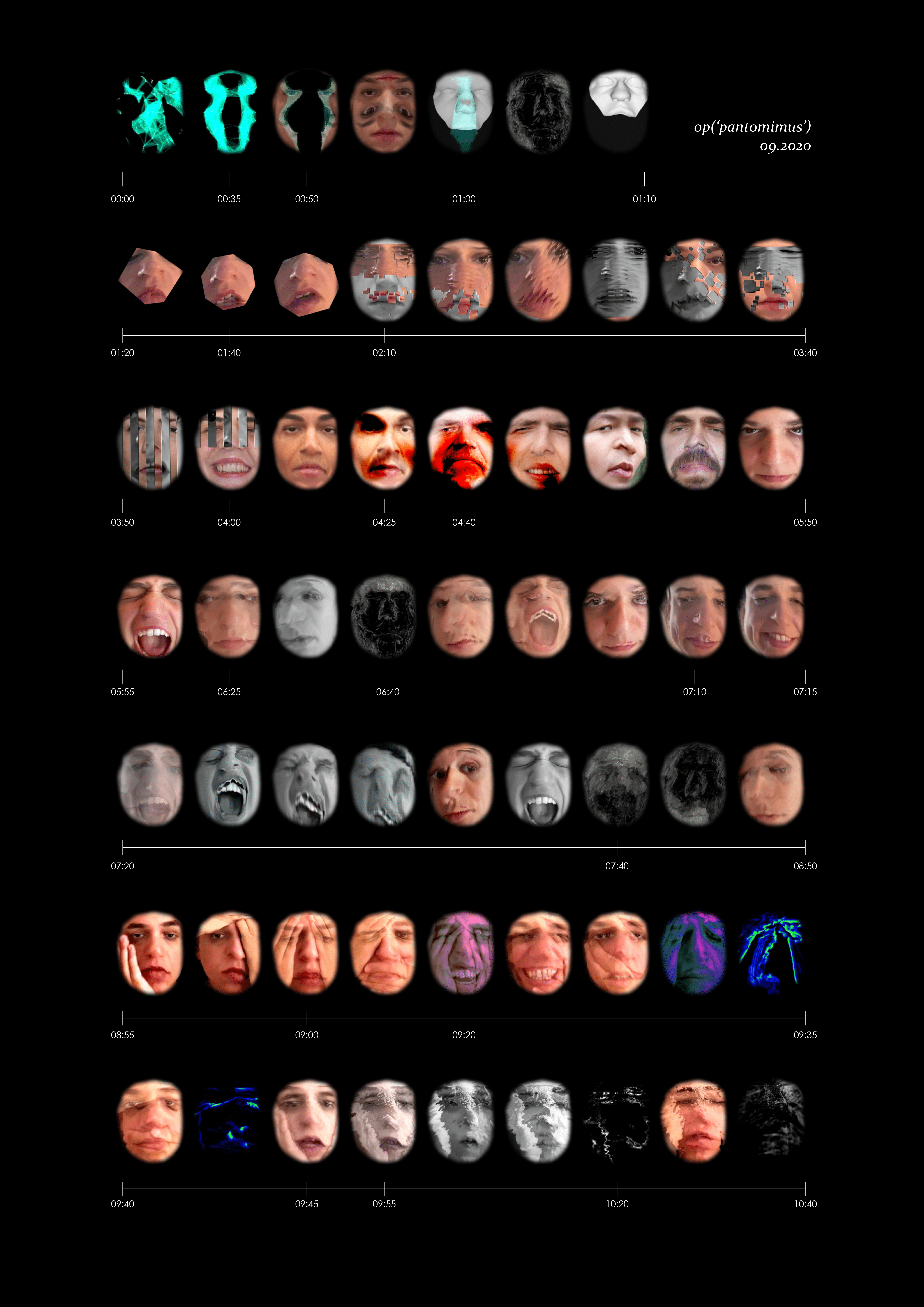

The project combines 3D modelling and 2D imagery to create immersive visual effects. One of the main challenges was the computational cost of face tracking, which demands a high-performance GPU. The original plan was to render the face-hacking visuals entirely in real time. However, to keep the system stable and maintain a consistent frame rate throughout the performance, a combination of real-time and pre-rendered visual effects was used instead. The visual effects still integrate seamlessly with the live performance.

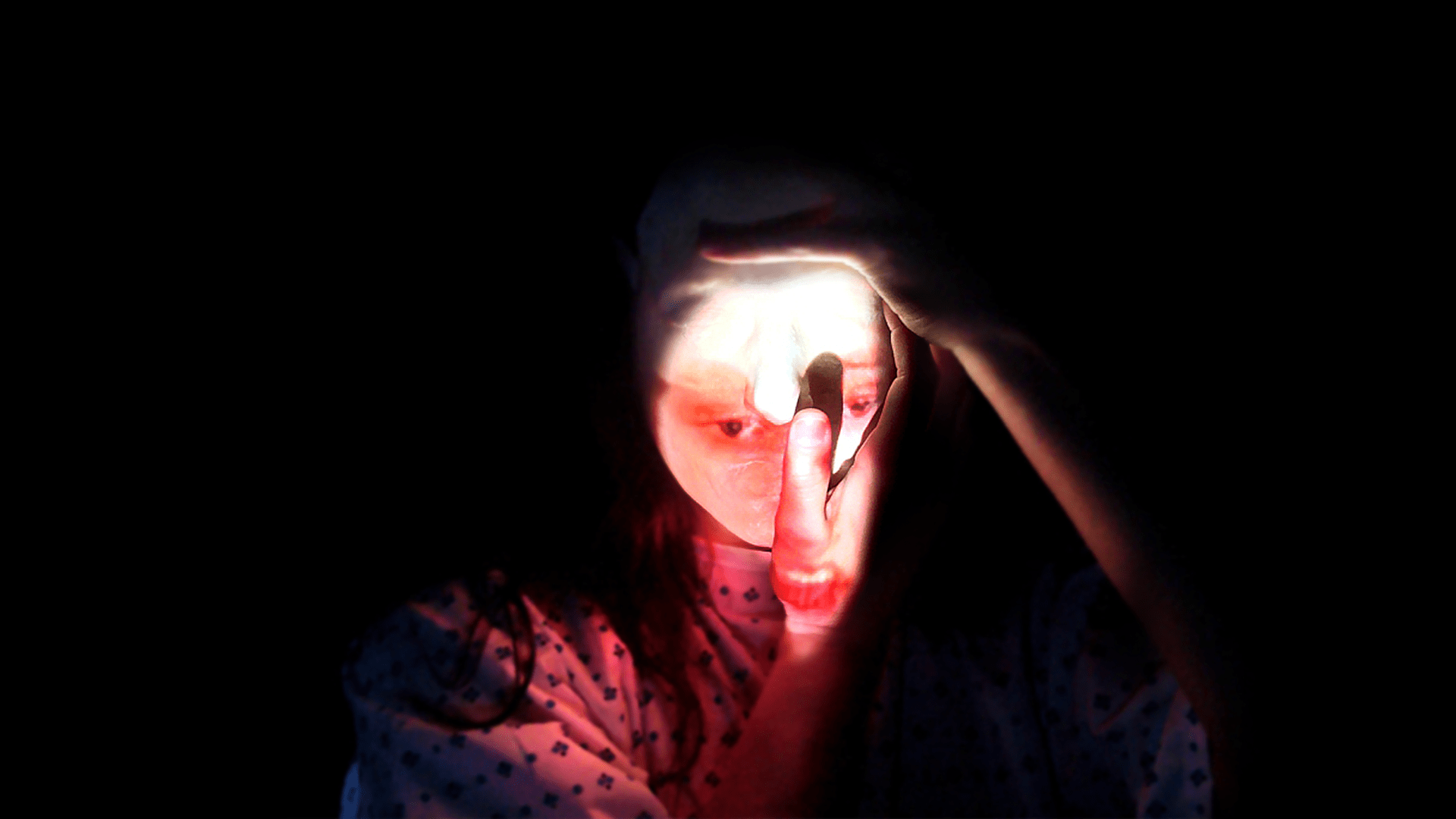

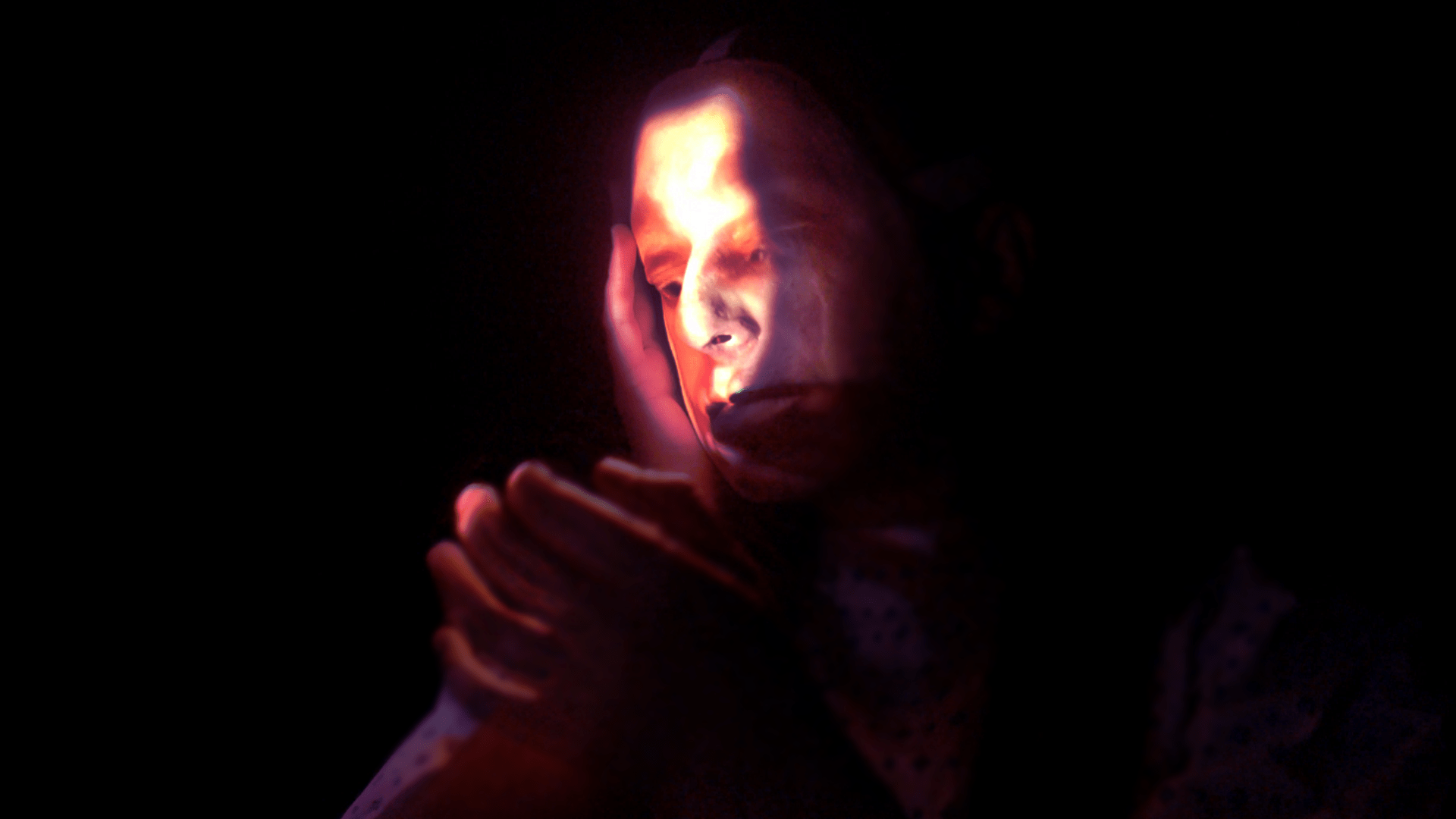

The results

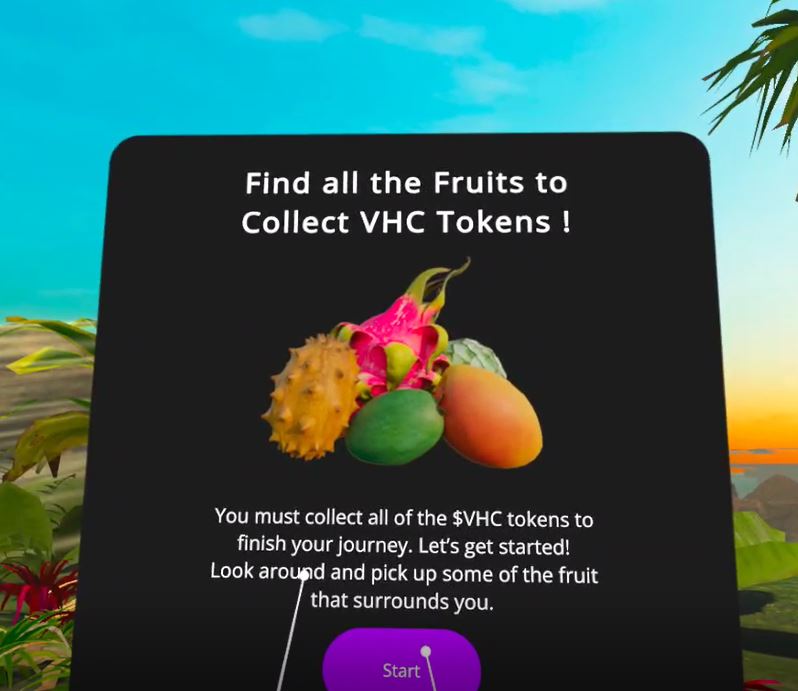

The result is a live performance at Goldsmiths, University of London. A custom-built face projection mapping application developed in TouchDesigner incorporates live data into the performance, and the visuals are projected onto a specially designed mask worn by the performer. The performance is divided into five distinct phases, each accompanied by a dynamic stereo audio setting. Together, the choreography, visuals, and audio form a multi-sensory experience that presents the story in a new way, and the intimate setting of the venue lets the audience become fully engrossed in the performance.
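This write-up does not describe how the TouchDesigner application maps tracked face positions onto the mask. As a hedged illustration only, one common building block in projection mapping is a planar homography that converts camera-space points into projector pixels, fitted from four measured point pairs. The sketch below is plain Python and is not the project's TouchDesigner network. The function names and calibration points are hypothetical, and treating the front of the mask as a flat plane is a simplifying assumption; a curved mask would usually need a 3D model or a mesh warp instead.

```python
# Hypothetical sketch: map camera-space points (e.g. tracked face landmarks)
# to projector pixels with a planar homography. Assumes the mask surface is
# roughly planar and that 4 camera/projector point pairs were measured.


def solve_linear(a, b):
    """Solve a * x = b using Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_points(src, dst):
    """Fit a 3x3 homography H (with h33 fixed to 1) from 4 point pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31*x + h32*y + 1) = h11*x + h12*y + h13, rearranged linearly
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v * (h31*x + h32*y + 1) = h21*x + h22*y + h23, rearranged linearly
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(h, point):
    """Project one (x, y) point through H and divide by the w coordinate."""
    x, y = point
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (u / w, v / w)


# Usage with made-up calibration data: normalised camera corners -> projector pixels.
camera_pts = [(0, 0), (1, 0), (1, 1), (0, 1)]
projector_pts = [(100, 50), (300, 50), (300, 250), (100, 250)]
H = homography_from_points(camera_pts, projector_pts)
print(apply_homography(H, (0.5, 0.5)))  # centre of the camera square
```

In a live setup the fit would be computed once during calibration, and only `apply_homography` would run per frame for each tracked landmark, which keeps the per-frame cost small compared with the face tracking itself.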

Produced By: Andrew Chang

Mask Design: Ray Zheng

Performer: Kristia Morabito

Audio: Christina Karpodini